Objectives & Main Features

The objectives of the project were to compare the performance of several stereo matching methods, namely Sum of Absolute Differences (SAD), Sum of Squared Differences (SSD), Normalized Cross Correlation (NCC) and Semi-global Matching (SGM), and to study the choice of parameters such as kernel size. An Intel RealSense D455 RGB-D camera was used to collect the stereo image pairs. The camera can project an infrared pattern that assists depth estimation by adding texture to featureless surfaces such as white walls. Therefore, whether the infrared projection helps generate a better depth map was also tested and is discussed.

Steps & Implementation

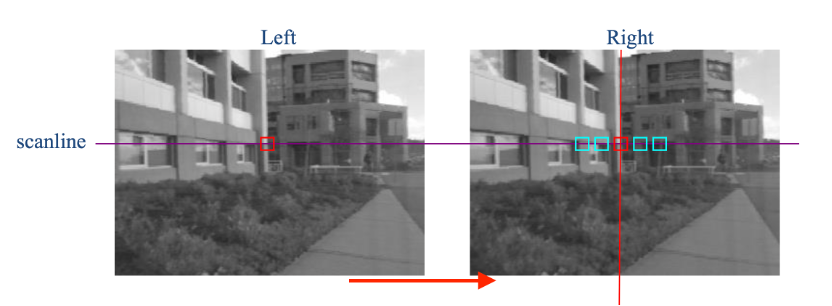

In general, the matching procedure follows the flowchart in Figure 2. The idea is to find, in both the left and right images, the pixel that corresponds to the same point in the real world. As shown in Figure 1, a kernel (a square window) is first chosen in one image, for example the left image. Then, starting from the same position in the right image, a kernel of the same size is slid horizontally, and the cost between the left kernel and each position of the sliding kernel is calculated. The cost function is interchangeable. The position with the lowest cost within the sliding range, which is also configurable, is taken as the match for the left kernel. The horizontal pixel distance between the left kernel and its match in the right image is the disparity. The closer the object is, the larger the disparity is, so the sliding range determines the closest distance at which depth can be recovered.
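The procedure above can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not the project's actual code: the function names, the default kernel half-width and the leftward search direction in the right image are assumptions, and SAD is used only as a placeholder cost.

```python
import numpy as np

def sad(a, b):
    # Placeholder cost: sum of absolute differences between two windows.
    return np.abs(a.astype(np.float64) - b.astype(np.float64)).sum()

def match_pixel(left, right, row, col, half, max_disp, cost_fn=sad):
    """Return the disparity with the lowest cost for one left-image pixel."""
    ref = left[row - half:row + half + 1, col - half:col + half + 1]
    best_d, best_cost = 0, np.inf
    for d in range(max_disp + 1):       # the sliding range
        c = col - d                     # assumed: search leftwards in the right image
        if c - half < 0:                # window would leave the image
            break
        cand = right[row - half:row + half + 1, c - half:c + half + 1]
        cost = cost_fn(ref, cand)
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def disparity_map(left, right, half=2, max_disp=16, cost_fn=sad):
    """Brute-force disparity map; border pixels are left at zero."""
    h, w = left.shape
    disp = np.zeros((h, w), dtype=np.int32)
    for r in range(half, h - half):
        for c in range(half, w - half):
            disp[r, c] = match_pixel(left, right, r, c, half, max_disp, cost_fn)
    return disp
```

The nested loops are slow on full-resolution images; they are kept here because they mirror the description step by step.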

Three cost functions, SAD, SSD and NCC, were implemented; the performance of each is discussed in the next section.

Sum of Absolute Differences (SAD)

$$\sum_{(i,j)\in W}\left|I_1(i,j)-I_2(x+i,y+j)\right|$$

Sum of Squared Differences (SSD)

$$\sum_{(i,j)\in W}\left(I_1(i,j)-I_2(x+i,y+j)\right)^2$$

Normalized Cross Correlation (NCC)

$$\frac{\sum_{(i,j)\in W} I_1(i,j)\cdot I_2(x+i,y+j)}{\sqrt{\sum_{(i,j)\in W} I_1^2(i,j)\cdot\sum_{(i,j)\in W} I_2^2(x+i,y+j)}}$$

Semi-global Matching (SGM) - Census Transform & Hamming distance

The previous methods sum a per-pixel cost over the kernel between the left and right images to find the best match. In other words, the raw pixel values inside the kernel serve as the feature on which the matching relies.

For scenes with little illumination change and relatively many features, SAD, SSD and NCC can find good matches. However, if the illumination differs between the two images, the matching is strongly affected because the mean pixel value differs even for the same part of the scene.
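The three window costs defined earlier, and their sensitivity to illumination, can be illustrated with a small sketch. The function names and the synthetic patch are assumptions made for the example. Note that NCC is a similarity score rather than a cost, so the best match has the largest value. In the non-zero-mean form given above, NCC cancels a pure gain change but not an additive brightness offset.

```python
import numpy as np

def sad(w1, w2):
    # Sum of Absolute Differences: 0 for identical windows.
    return np.abs(w1 - w2).sum()

def ssd(w1, w2):
    # Sum of Squared Differences: 0 for identical windows.
    return ((w1 - w2) ** 2).sum()

def ncc(w1, w2):
    # Normalized Cross Correlation without mean subtraction,
    # as in the formula above; 1.0 for proportional windows.
    denom = np.sqrt((w1 ** 2).sum() * (w2 ** 2).sum())
    return (w1 * w2).sum() / denom if denom > 0 else 0.0

rng = np.random.default_rng(0)
patch = rng.uniform(10.0, 200.0, size=(5, 5))  # synthetic 5x5 window
gain = 1.2 * patch      # hypothetical multiplicative illumination change
offset = patch + 50.0   # hypothetical additive illumination change

for name, other in [("same", patch), ("gain", gain), ("offset", offset)]:
    print(name, sad(patch, other), ssd(patch, other), ncc(patch, other))
```

SAD and SSD grow under both changes even though the underlying patch is the same, which is the failure mode described above.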

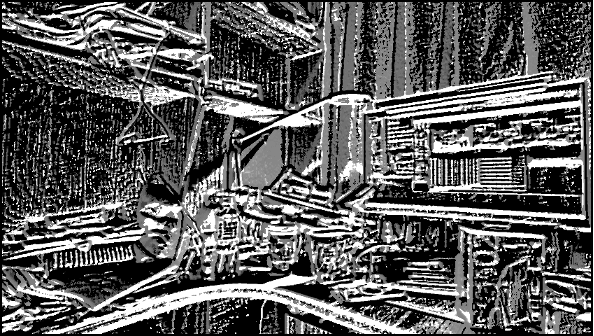

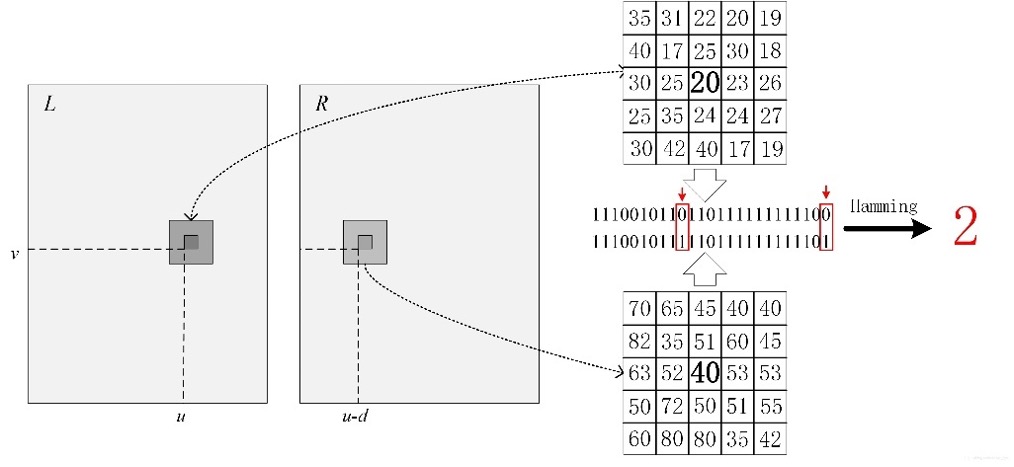

The census transform uses the relative ordering between a pixel and its adjacent pixels as the feature for matching, so it captures the local pattern of intensity change around each pixel rather than absolute values. As shown in Figure 3, the census transform compares the value of the center pixel with the pixels around it. If the adjacent pixel is larger, a ‘1’ is encoded; if the center pixel is larger, a ‘0’ is encoded. The binary codes from the left and right images are then combined with an ‘XOR’ operation, and the number of ‘1’ bits in the result is the Hamming distance, which serves as the cost. The best match has the smallest Hamming distance.
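A minimal sketch of the census transform and the Hamming distance follows. The function names, the row-major bit order and the treatment of equal pixels (encoded as ‘0’) are assumptions; the text above does not specify them. Image edges wrap around because of `np.roll`, so codes in the border of width `half` should be ignored.

```python
import numpy as np

def census_transform(img, half=1):
    """Per-pixel bit string: 1 where the neighbour is larger than the centre."""
    h, w = img.shape
    codes = np.zeros((h, w), dtype=np.uint64)
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            if dy == 0 and dx == 0:
                continue  # the centre pixel is not compared with itself
            # neighbour[r, c] == img[r + dy, c + dx] (wraps at the edges)
            neighbour = np.roll(img, shift=(-dy, -dx), axis=(0, 1))
            bit = (neighbour > img).astype(np.uint64)
            codes = (codes << np.uint64(1)) | bit
    return codes

def hamming(code_a, code_b):
    """Number of differing bits between two census codes."""
    return bin(int(code_a) ^ int(code_b)).count("1")

window = np.array([[1, 9, 3],
                   [7, 5, 2],
                   [8, 4, 6]])
centre_code = census_transform(window)[1, 1]
print(format(int(centre_code), "08b"))
```

Because only the ordering of pixel values matters, adding a constant brightness to the whole window leaves the code unchanged.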

Results

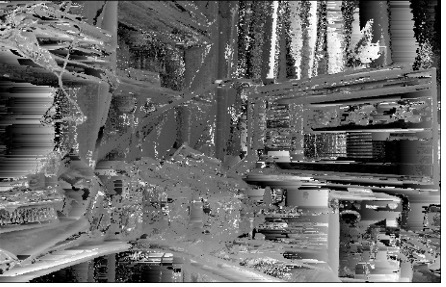

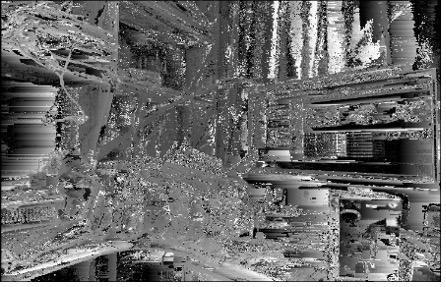

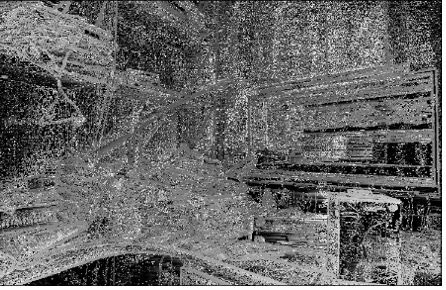

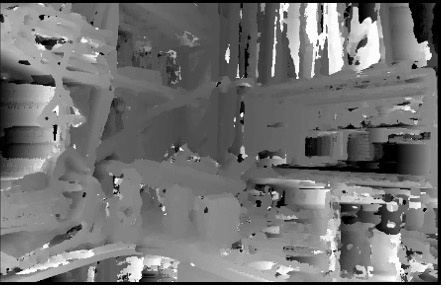

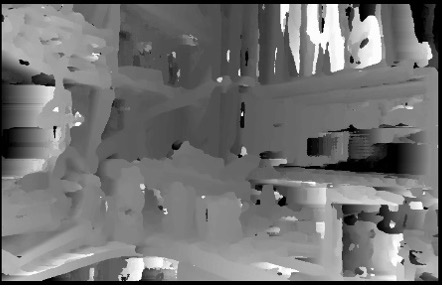

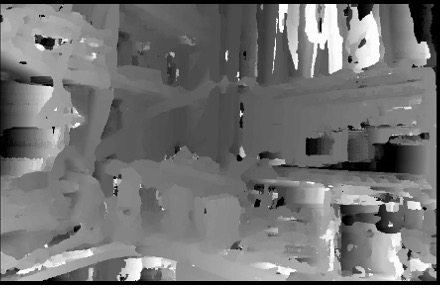

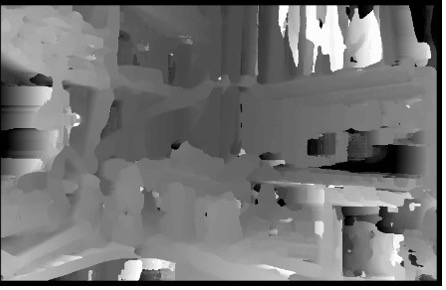

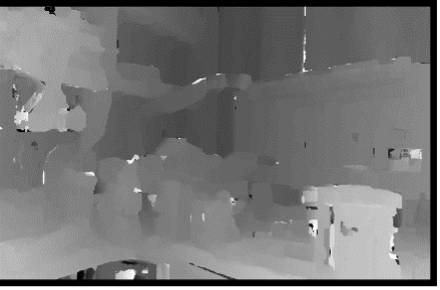

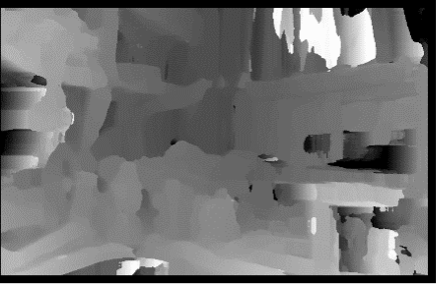

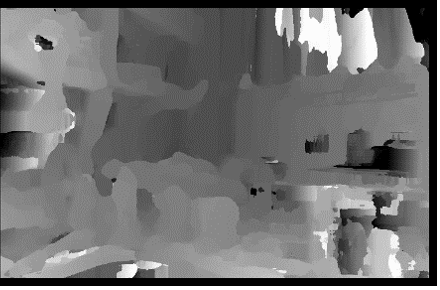

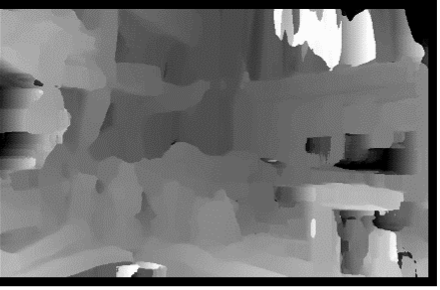

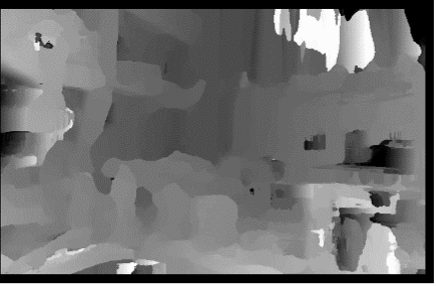

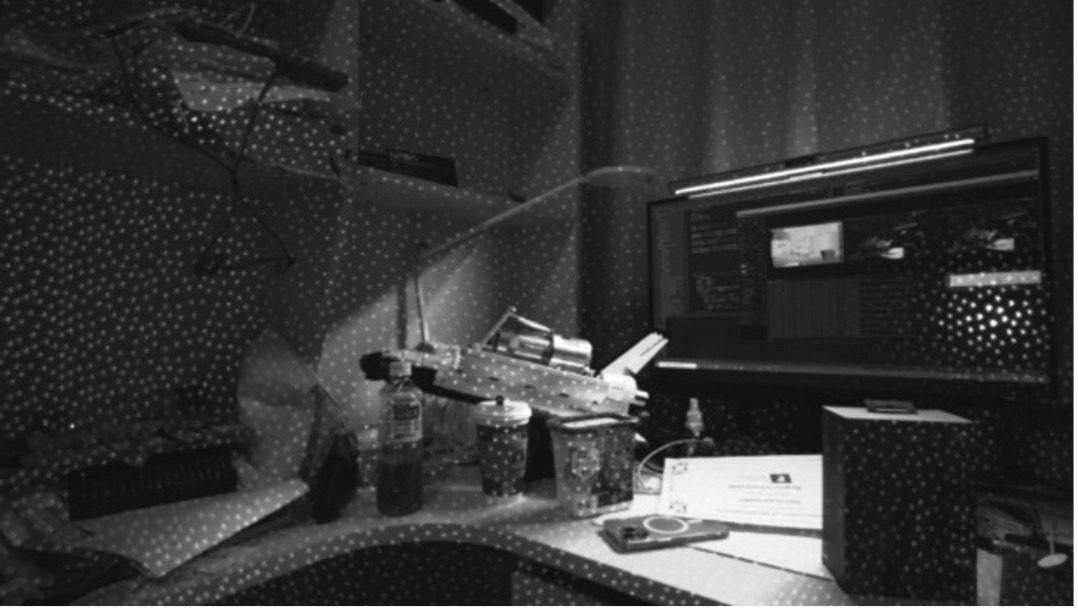

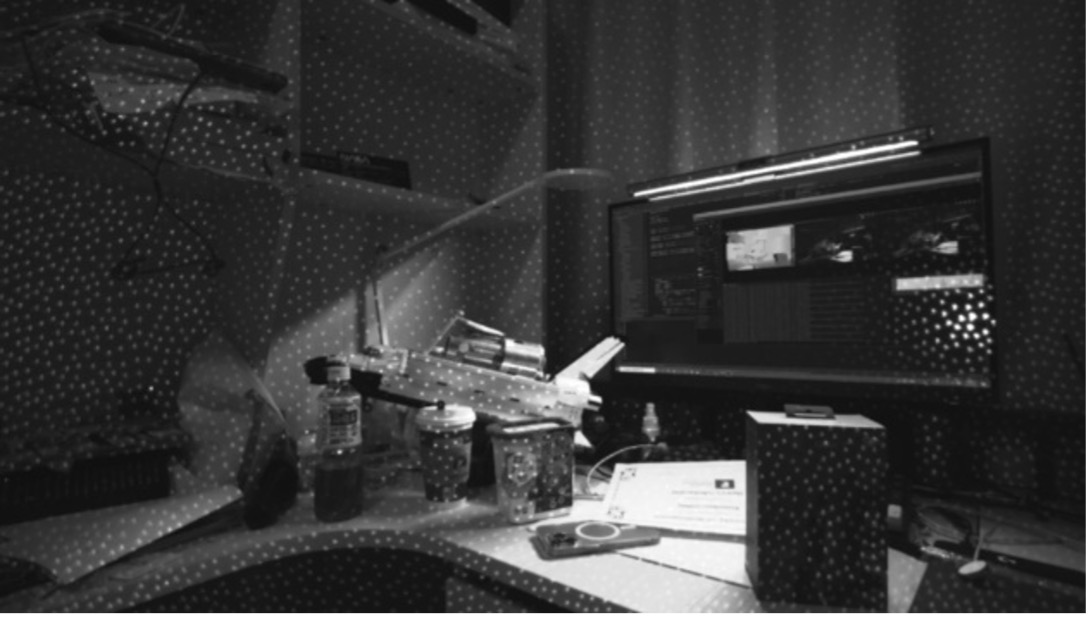

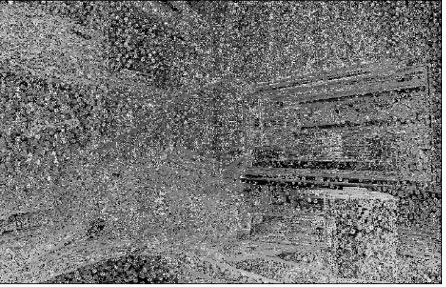

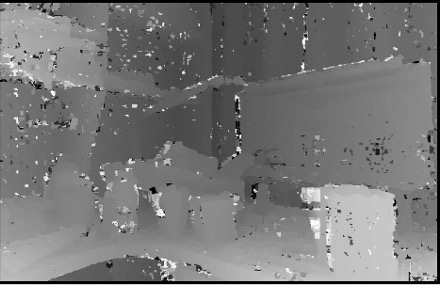

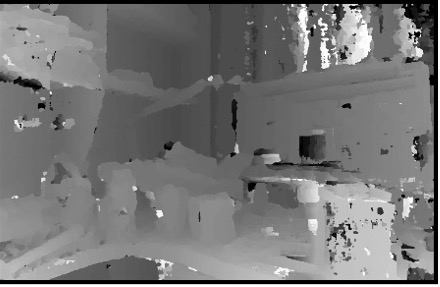

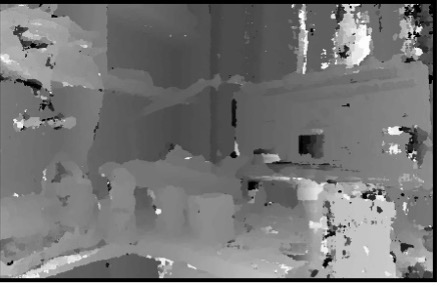

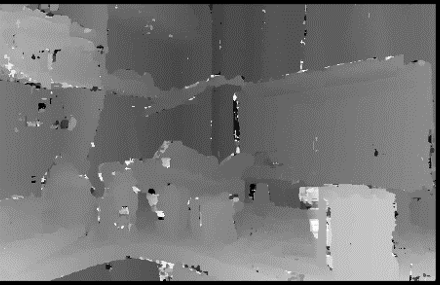

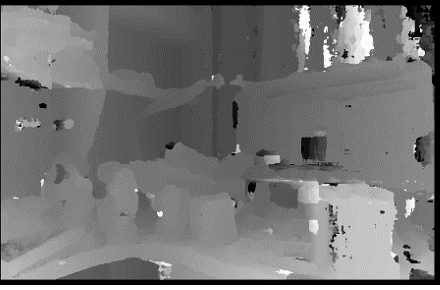

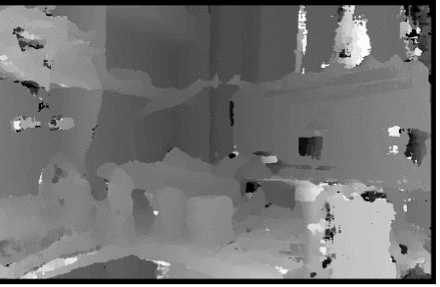

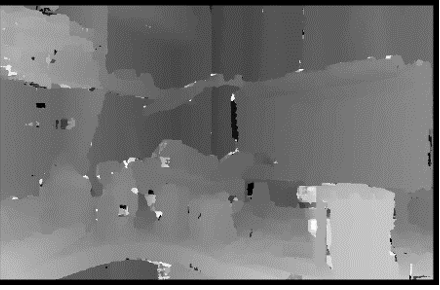

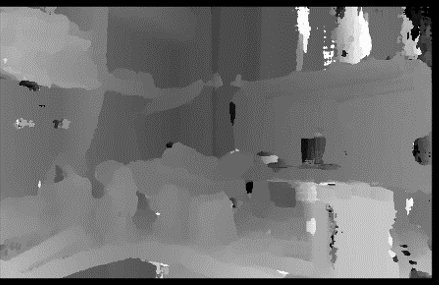

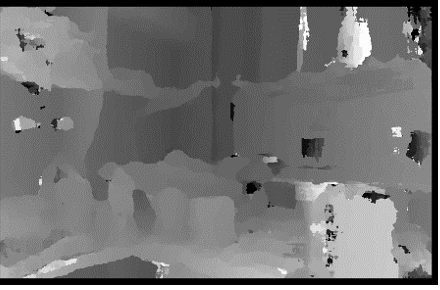

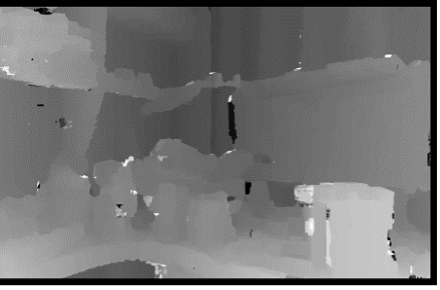

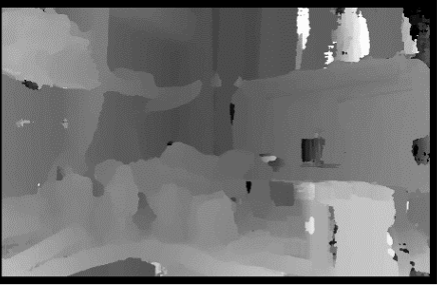

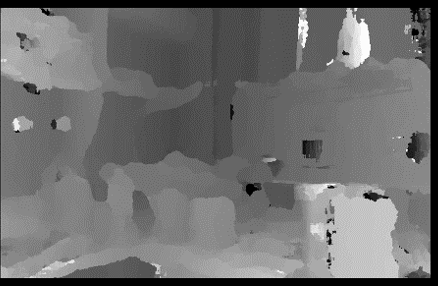

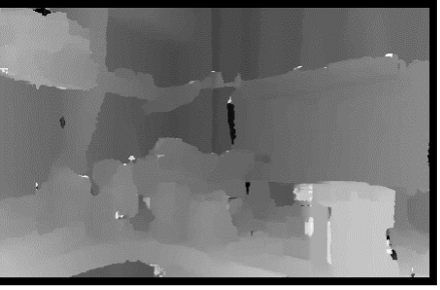

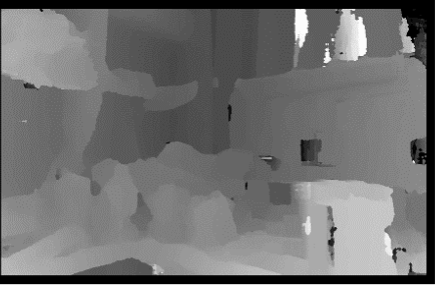

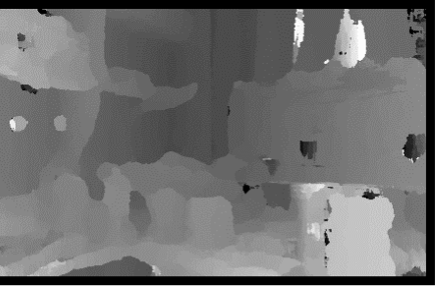

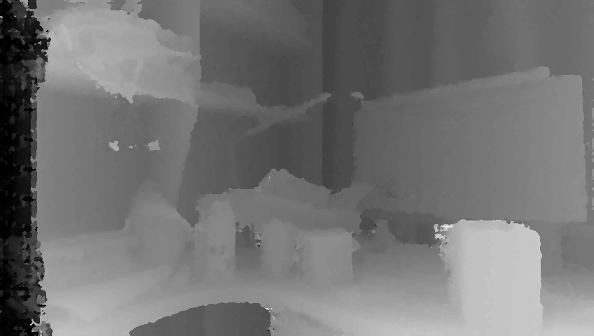

The three aforementioned cost functions were tested with different kernel sizes. Moreover, because the Intel RealSense RGB-D camera can actively project a fixed infrared pattern to assist its on-chip stereo matching, the stereo matching performance with and without the infrared projection was also tested and compared. The images were already rectified by the camera before output, so they were used directly for stereo matching. The resolution of the images was 848×480. Figures 4 and 5 were captured with the infrared emitter off, while Figures 6 and 7 were captured with it on. The maximum disparity was set to 100 so that the depth map could be computed completely.
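The link between the maximum disparity and the nearest measurable depth follows from the standard rectified-stereo relation, depth = focal length × baseline / disparity. The sketch below uses placeholder values for the focal length (in pixels) and the baseline (in metres); they are assumptions for illustration only, not the calibration of the camera used here.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in metres for a rectified stereo pair; zero disparity maps to infinity."""
    if disparity_px <= 0:
        return float("inf")
    return focal_px * baseline_m / disparity_px

# Placeholder calibration values (hypothetical, not measured).
FOCAL_PX = 600.0
BASELINE_M = 0.1
MAX_DISPARITY = 100  # the search range used in this report

nearest = depth_from_disparity(MAX_DISPARITY, FOCAL_PX, BASELINE_M)
print(f"nearest measurable depth: {nearest:.2f} m")
```

Objects closer than this distance would need a disparity larger than the search range and therefore cannot be matched correctly.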

Without Infrared Laser: